"Stand Your Ground" - A Case for GRC:

If you've not had the opportunity to read the recent Dan Geer / Jerry Archer IEEE S&P Cleartext column titled "Stand Your Ground," then please go read it now. It's only a single page, two-column article and it won't take you long. It is, hands-down, one of the best summaries of contemporary, leading-edge thinking on the state of infosec that I've seen.

Finished? Cool... let's continue...

Allow me to take an outlandish step and try to summarize this excellent, concise work in three bullets. I'll then try to elaborate a bit on each of these points to put things into a practicable use case.

In short, the article points out the key business imperative: survival. In order for a business to succeed and have value, it has to survive over time, enduring ups and downs, and especially being able to handle various IT-related incidents. In order to achieve this objective, Geer and Archer roughly cover three things:

1. Radically Reduce Attack Surface

2. Design For Resilience and Palatable Failure Modes

3. Automate For Manageability

Allow me to elaborate a bit on each of these points.

Radically Reduce Attack Surface

At the ISSA International Conference 2011 in Baltimore, then-FBI executive assistant director Shawn Henry not only advocated that companies reduce their online presence, but also suggested that they consider whether certain types of records should even be electronic rather than remaining in a more traditional physical form. I scoffed at the notion back then, but am now starting to come around to the idea, if ever so slightly.

Part of the reason I've started to come around is because of things like this story in Business Week that talks about malware targeting the private networks of airlines, potentially exfiltrating data or even manipulating flight and reservation information. Of course, we've heard similar horror stories about SCADA and ICS systems in the energy sector, where 10+ years ago these companies would turn a blind eye to infosec advice because these systems weren't internet-connected, but today almost brag about how their operators can access and manage these systems from anywhere in the world. Kind of missing the point, eh?

In the article, the authors comment that "expansion of the enterprise's catalog of essential technologies creates unfunded liabilities," which exactly translates to an increased attack surface. Put another way, the more we rely on technology, the more likely we are to experience a very bad incident when one of those technologies becomes compromised or unavailable.

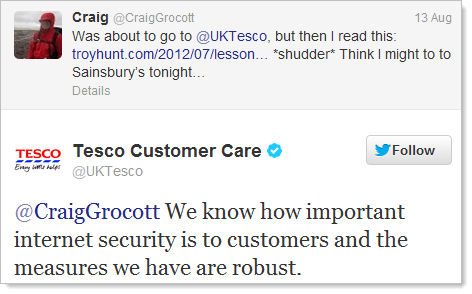

Now, some of you may be cringing at the use of the phrase "attack surface," and understandably so. Really, when talking about "attack surface," I'm implying a few things. First, it means reducing the threat and vulnerability profiles of online systems. This objective can be achieved in part through traditional patching and hardening practices, as well as by working to not draw attention to assets (e.g., don't volunteer yourself as a ready target in the Washington Post or New York Times ;). Second, it means restricting what systems and data are actually online and accessible. Third - and this is quickly becoming the most difficult - it means aggressively working with employees to help reduce what they leak in and out of the organization. BYOD policies inevitably mean allowing in hundreds of relatively insecure, unmonitored devices that can expose the enterprise to a wide array of badness, not to mention that you're also then letting people walk out with devices that have more processing power and storage than an entire office of PCs did 20 years ago.

If an asset is of high value to the organization, then it's time to evaluate what level of exposure is acceptable - and, by extension, what level of liability and loss is acceptable - and tune policies and practices accordingly. Oh, and make sure these decisions are not made in a vacuum. These are business decisions that must be made by business leaders with input from IT, security, and risk personnel.

Design For Resilience and Palatable Failure Modes

So... you've taken the first step and removed what you could from harm's way... sadly, it's probably not nearly as much as you'd like (as a security or risk management person), but at least it's a start... now what do you do?

As part of a survivability strategy, your organization must conduct risk management planning from the perspective of assuming incidents will occur. As the NSA has done, it's time to also assume that the line between "inside" and "outside" is at best thin, and most likely merely representative and not truly restrictive. Also, you must assume that your organization will be caught up as part of the collateral damage (or perhaps directly targeted, depending on what your business does) in any number of online "exchanges" between government forces, spies, protestors, anarchists, etc. (pick a name/label, it'll generally fit). The point here is this: you'll never stop all potential incidents, and thus must plan for managing these incidents effectively and efficiently.

There are a few considerations around this topic... first, assuming failures will occur, you must have contingency plans (e.g., incident response and business continuity plans) in place, with people adequately trained, and procedures adequately tested and drilled. Second, when planning, designing, and strategizing, it's imperative to consider infrastructure and data resilience. That is, how well can your business continue to function despite degraded conditions? Then, lastly, it's best to attempt to build "acceptable" failure modes into your operations. Specifically, when designing and architecting solutions, evaluate the ways in which each solution can fail, and plan for those failure modes as best as possible. Not only will this approach help you identify worthwhile defensive trade-offs (risk decisions), but it will also show you how your environment can break and, as a result, how you can build safety nets around those failure points to help dampen the net-negative impact. Also, don't forget to loop back through the first point on reducing attack surface as part of these design and architecture discussions, since the easiest way to avoid a data breach is to never expose the data in the first place.

Automate For Manageability

Last, but not least, is the closing paragraph of the article, which advocates automating as much as possible to account for ever-changing compliance requirements, to reduce first-level support overhead, and to improve the overall efficiency of other efforts. In a nutshell, I view this paragraph - and much of the article - as a strong use case for GRC solutions (caveat: I'm a wee bit biased).

As I see it, there are a few ways in which GRC solutions help automate various duties in the enterprise, and as a result reduce some overhead burdens:

* Managing Compliance Requirements (w/ UCF): A good GRC solution will provide a means to effectively map external compliance objectives and internally-designated requirements (such as audit/certification readiness) to a standard controls framework (like the Unified Compliance Framework), and further through to the policy framework. Organizations should be managing their own custom controls framework, and not simply acting ad hoc in response to each individual set of requirements derived from any number of standards and regulations.

* Providing a Self-Service Policy/Requirements Interface: In addition to mapping requirements to controls to policy documents, it is also vital that this information be made available to users, and that users be given nominal training on how to access and make use of this information. Building concise, clear policies that articulate requirements and link them to their source authorities will help users understand the "why" of a given policy, which will in turn increase the likelihood that they will want to conform, and will in fact adhere to policies.

* Automating Various Processes: Many processes can be automated using tools like GRC solutions. Examples include policy authoring, review, and approval processes, or even routine periodic reviews and revisions. Automated processes can also be used to track and follow up on exception requests, various risk factors within a risk register, remediation activities around vulnerability scan or pentest findings, or exercise of business continuity and disaster recovery plans.

* Centralizing and Coordinating Technical Security Data (addressing "big data"): Much of the "big data" problem revolves not around performing analysis in a given silo, but around being able to coordinate analyses across silos. GRC solutions provide a convenient means to bridge silos to allow management, executives, and risk managers to gain a broader, more complete view of operations and the business, and to thus make better-informed decisions.

* Managing Various Requests and Reports: How many organizations have multiple methods for capturing requests or types of reports? GRC solutions today provide a central point through which these requests and reports can be gathered, processed, and tied to other related data (e.g., incident and post-mortem reports tied to vuln scan and pentest data, as well as configuration requirements that may not have been followed/met). Again, the underlying principle here is automating collection, tracking, and management of this data, as well as encouraging better self-service for users, which in turn improves participation and conformance to desired behavioral norms.
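To make the requirements-to-controls-to-policy mapping described above a bit more concrete, here's a minimal sketch in Python. Everything in it is an assumption made up for illustration: the requirement labels, control IDs, policy names, and function names are hypothetical and don't come from the UCF, from any real standard, or from any particular GRC product. It only shows the general shape of the idea: map each requirement to common controls once, map controls to policies, and then answer "why does this policy exist?" and "which controls have no policy behind them?" from the data.

```python
from collections import defaultdict

# Hypothetical common controls framework, keyed by an internal control ID.
CONTROLS = {
    "CTL-001": "Encrypt sensitive data at rest",
    "CTL-002": "Review user access quarterly",
    "CTL-003": "Exercise business continuity plans annually",
}

# Hypothetical requirements (external and internal) mapped to control IDs.
REQUIREMENT_TO_CONTROLS = {
    "STANDARD-A 3.4": ["CTL-001"],
    "REGULATION-B 7.1": ["CTL-001", "CTL-002"],
    "INTERNAL-AUDIT 2": ["CTL-002", "CTL-003"],
}

# Hypothetical control IDs mapped to the policy documents that implement them.
CONTROL_TO_POLICIES = {
    "CTL-001": ["Data Protection Policy"],
    "CTL-002": ["Access Management Policy"],
}


def policy_provenance():
    """Return {policy name: sorted list of requirements that justify it}."""
    provenance = defaultdict(set)
    for requirement, control_ids in REQUIREMENT_TO_CONTROLS.items():
        for control_id in control_ids:
            for policy in CONTROL_TO_POLICIES.get(control_id, []):
                provenance[policy].add(requirement)
    return {policy: sorted(reqs) for policy, reqs in provenance.items()}


def unmapped_controls():
    """Return control IDs that no policy document covers yet (a gap list)."""
    return sorted(cid for cid in CONTROLS if not CONTROL_TO_POLICIES.get(cid))


if __name__ == "__main__":
    for policy, requirements in sorted(policy_provenance().items()):
        print(f"{policy}: required by {', '.join(requirements)}")
    print("Controls without a policy:", ", ".join(unmapped_controls()))
```

The design choice worth noting is that requirements never point directly at policies. They always pass through the controls layer, so a new standard or regulation only needs to be mapped to existing controls rather than triggering a fresh, ad hoc round of policy work.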

There's much more that GRC solutions can do to help, too, but this hopefully gives you an idea of the legitimate role that GRC can play for businesses. Now, obviously, GRC solutions are no panacea, and still require having smart people in your organization to help set things up, manage them on an ongoing basis, and provide that sentient human interrupt that automated processes occasionally need. Overall, though, GRC can help reduce some of the ongoing overhead costs typically associated with topics like policy frameworks, control frameworks, managing compliance objectives, and preparing for routine or major audits (e.g., SSAE-16 readiness, ISO 27001 readiness, PCI compliance). And, perhaps more importantly, these solutions provide users with a self-service interface where they can quickly answer policy and requirement questions, while also identifying the provenance of requirements, such as through seeing how they tie back to audit or compliance objectives.

In Closing...

At the end of the day, all three of these points should represent a critical focus for a security or GRC team. In particular, when worked together, the net effect is to reduce the overall risk profile facing the organization, which can have tremendous positive benefits. At the same time, it also helps the business better articulate survival objectives and gain a better understanding of just what assets (i.e., people/process/technology) are truly critical to ongoing operations.
